Dear Chris:

I added a componet T for temperature and modified vR and vJ, but I am uncertain about the derivation of residual and Jacobian.

the equations are:

Here I put the code:

macro def(u)[u, u#y, u#T, u#p]//

macro grad(u) [dx(u),dy(u)]//EOM

macro dot(v, u) ( v * u + v#y * u#y + v#T * u#T ) //EOM

macro div(u) ( dx(u) + dy(u#y) ) // velocity divergence

macro ugradu(v, U, u) ( v * (U * dx(u) + U#y * dy(u)) + v#y * (U * dx(u#y) + U#y * dy(u#y)) ) // scaled convection term

macro visc(v, u) ( dx(v) * dx(u) + dy(v) * dy(u) + dx(v#y) * dx(u#y) + dy(v#y) * dy(u#y)) // EOM

varf vR(def(dub), def(v)) = int2d(Th)(ugradu(v, ub, ub) - div(v) * ubp + visc(v, ub) * nu - vp * div(ub)

+ grad(ubT)' * [ub,uby] * vT+ Kappa * grad(ubT)' * grad(vT)- ubT * vy) //newly added

+ on(1,2,3,4, dub = ub, duby = uby) + on(1,dubT=ubT-1) + on(3,dubT=ubT);

varf vM(def(dub), def(v)) = int2d(Th)(dot(v, dub))

+ on(1,2,3,4, dub = ub, duby = uby) + on(1,dubT=ubT-1) + on(3,dubT=ubT);

varf vJ(def(dub), def(v)) = int2d(Th)(sig * dot(v, dub) + ugradu(v, ub, dub) + ugradu(v, dub, ub)

+ Kappa * grad(dubT)'* grad(vT)+ grad(dubT)'* [ub,uby] * vT - dubT * vy//newly added

- div(v) * dubp + visc(v, dub) * nu - vp * div(dub)+epsp * dubp * vp)

+ on(1,2,3,4, dub = ub, duby = uby) + on(1,dubT=ubT-1) + on(3,dubT=ubT);

Is there anything wrong with my varfs? And how to add a disturbance to ub[] to induce the startup? I tried to add a disturbance to ub[] but failed.

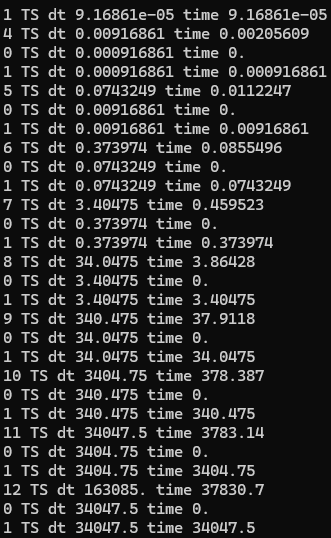

Also, I tried to run it to t=100, but stopped at step 962, what’s the problem? Should I adopt a finer mesh?

I monitored the temperature field, it looks reasonable.